Your executive team just approved a significant AI investment. You've evaluated platforms, sat through demos that looked incredible, and selected a vendor whose workflow builder makes agentic AI seem almost... easy. The sales engineer showed you how their system automated a complex approval process in 15 minutes. Your board is asking when you'll see ROI.

Then it hits you: Who on your team is actually going to build this?

The Pressure Is Real

The competitive landscape has shifted dramatically in just 12 months. Enterprise AI spending exploded from $11.5 billion in 2024 to $37 billion in 2025 - a 3.2x increase. Ninety-four percent of organizations now use AI, and 91% of technology decision-makers are increasing IT budgets, with AI initiatives driving over half of that growth.

Your competitors aren't experimenting anymore. They're deploying. OpenAI reports that ChatGPT Enterprise weekly messages increased 8x over the past year, with "frontier firms" generating twice as many AI interactions per employee as the median enterprise. The message is clear: organizations that operationalize AI, rather than just "using AI," are pulling ahead.

You need to move. The question is: with whom?

The Complexity They Don't Show in Demos

Here's what happens after the vendor leaves. Your team gets access to the platform, and suddenly those 15-minute demos stretch into weeks of questions:

- "How do we integrate this with our ERP system?"

- "The API documentation says it supports webhooks, but which webhook format does our CRM actually send?"

- "Why did the agent work perfectly in testing but start producing errors when we connected it to our production database?"

- "It ran fine for three weeks, then failed overnight when a third-party service changed its authentication method. How do we even debug this?"

These aren't hypothetical. Recent research shows that only 31% of AI use cases reach full production. That is double the 2024 rate, but it still means more than two-thirds stall out. McKinsey found that over 80% of organizations report no meaningful impact on enterprise-wide EBIT from their AI initiatives.

Why? Because building production agentic AI systems isn't like deploying SaaS. Demos run on clean test data with predictable inputs. Production runs on messy reality.

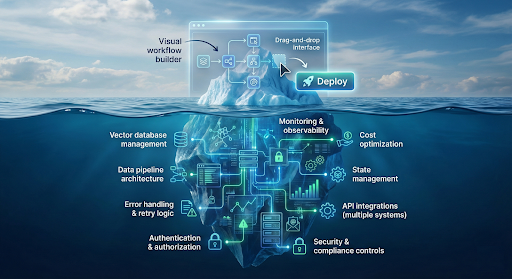

And here's what the drag-and-drop workflow builder doesn't show you: real agentic AI systems are architectural endeavors, not configuration exercises. That visual workflow is just the surface layer. Underneath, you're orchestrating:

- Vector databases storing embeddings that need to be kept fresh

- RAG pipelines pulling from multiple data sources with different schemas

- Model routing logic deciding which LLM to use when

- Error handling for when external APIs fail (and they will)

- Monitoring and observability for systems that make autonomous decisions

- Security controls ensuring agents can't access data they shouldn't

- State management across multi-step workflows

- Cost optimization so your LLM bill doesn't spiral

The workflow builder helps you describe what you want. Actually making it work in production requires building and maintaining complex infrastructure.
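To make one item from that list concrete: "error handling for when external APIs fail" means more than wrapping a call in try/except. Below is a minimal Python sketch, assuming a hypothetical `TransientAPIError` that your own integration code raises for retryable failures (timeouts, rate limits, 5xx responses). It retries with capped exponential backoff plus jitter and, just as importantly, gives up after a fixed number of attempts. Unbounded retries are how a flaky dependency turns into a runaway bill.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical error your integration code raises for retryable failures."""


def call_with_retries(fn, max_attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call fn(), retrying transient failures with capped exponential backoff.

    Non-transient exceptions propagate immediately; after max_attempts
    transient failures the last error is re-raised to the caller.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientAPIError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure instead of looping
            # Backoff ceiling grows 0.5s, 1s, 2s, ... and never exceeds max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # "Full jitter": sleep a random fraction of the ceiling so many
            # clients recovering at once don't retry in lockstep.
            sleep(random.uniform(0, delay))


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_crm_lookup():
        # Hypothetical upstream API that times out twice, then recovers.
        calls["n"] += 1
        if calls["n"] < 3:
            raise TransientAPIError("upstream timeout")
        return {"status": "ok"}

    print(call_with_retries(flaky_crm_lookup, sleep=lambda s: None))  # {'status': 'ok'}
```

The injectable `sleep` parameter exists only so the behavior can be exercised without real waiting; production code would also log each failed attempt so the retries show up in monitoring.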

The AI Implementation Iceberg: What demos show vs. what production actually requires

The Shortage No One Mentions in Sales Calls

Here's the uncomfortable truth: there aren't enough people who know how to do this work.

The White House Council of Economic Advisers reported that 36% of AI-related jobs in the U.S. remain unfilled. Job postings requiring AI skills grew 257% from 2015 to 2023 - nearly five times the 52% overall job growth rate. AI Engineers now command average base salaries of $204,000, compared to $92,000 for traditional Computer Engineers.

A 2025 survey found that 46% of technology leaders cited AI skill gaps as their biggest obstacle to implementation. Job postings for emerging AI roles skyrocketed nearly 1,000% between 2023 and 2024.

And before you think, "We'll just upskill our existing team" or "We'll just hire fresh talent," understand what production agentic AI actually requires.

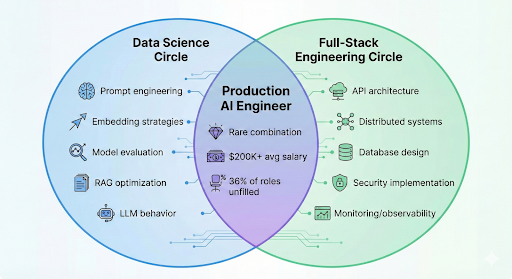

The Hybrid Skill Set Nobody Has

Building production agentic AI systems requires a rare combination: data science expertise AND full-stack engineering capability. You need people who can:

On the data science side:

- Design effective prompts and understand LLM behavior under different conditions

- Build and maintain vector embeddings that actually improve retrieval

- Evaluate model outputs and tune systems based on real usage patterns

- Understand when you're seeing a data quality issue versus a model limitation

- Implement proper evaluation frameworks - because traditional software testing doesn't work here

On the engineering side:

- Architect distributed systems that can scale

- Build robust APIs that handle failures gracefully

- Implement proper authentication, authorization, and data access controls

- Set up monitoring and observability for systems making autonomous decisions

- Design data pipelines that keep information flowing reliably

- Debug production issues across multiple systems and third-party services

The rare intersection: AI Engineers need both data science intuition and engineering discipline

This isn't "learn data science OR full-stack development." It's both. Your data scientist who's brilliant at training models probably hasn't built a production API that handles 10,000 requests per second. Your full-stack engineer who can architect microservices probably doesn't understand embedding drift or prompt injection attacks.

The market responded to this gap with a flood of training programs. They promise to "transition" software engineers or data analysts into AI roles in 12-16 weeks. Here's what they don't tell you.

Why Training Programs Aren't Solving This

These programs optimize for completion rates and certificates, not for producing people who can actually build, debug, and deploy production AI systems.

AI is inherently difficult. There are no beginner-friendly shortcuts. Most graduates from these programs finish knowing terminology; they can explain what RAG is, discuss attention mechanisms, and recognize framework syntax. But they freeze the moment something breaks in production. When data looks wrong. When agents start behaving unpredictably. When costs spike unexpectedly. When the system that worked perfectly in testing fails in ways you never anticipated.

That's because theory-heavy courses teach about AI rather than forcing students to do AI under real constraints. Real confidence comes from failing repeatedly:

- Agents that loop infinitely because you didn't anticipate a specific edge case

- Embedding pipelines that worked for 10,000 documents but break at 100,000

- Monitoring dashboards showing your agent made 50,000 LLM calls overnight (hello, $15,000 surprise bill)

- Debugging sessions at 2 AM trying to figure out why your agent's behavior changed after an upstream data source modified its schema
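The first and third failure modes above share a cheap guardrail: a hard per-run ceiling on LLM calls and spend. The sketch below is illustrative, not any platform's API. `RunBudget`, `run_agent`, and the limits are hypothetical, and `next_action` stands in for one LLM call. The $0.30 per-call figure in the example is simply the average implied by the $15,000 for 50,000 calls scenario above.

```python
from dataclasses import dataclass


class BudgetExceeded(Exception):
    """Raised when an agent run would go past its step or spend ceiling."""


@dataclass
class RunBudget:
    max_steps: int = 20         # hypothetical cap on LLM calls per run
    max_cost_usd: float = 5.00  # hypothetical spend ceiling per run
    steps: int = 0              # calls authorized so far
    cost_usd: float = 0.0       # spend authorized so far

    def charge(self, call_cost_usd: float) -> None:
        """Authorize one more call, or raise if it would breach a limit."""
        if self.steps + 1 > self.max_steps:
            raise BudgetExceeded(f"step ceiling {self.max_steps} reached")
        if self.cost_usd + call_cost_usd > self.max_cost_usd:
            raise BudgetExceeded(f"cost ceiling ${self.max_cost_usd:.2f} reached")
        self.steps += 1
        self.cost_usd += call_cost_usd


def run_agent(next_action, budget: RunBudget, cost_per_call: float):
    """Drive a hypothetical agent loop until it returns a final answer.

    next_action() stands in for one LLM call: it returns None to keep going
    or a string to finish. The budget is charged before every call.
    """
    while True:
        budget.charge(cost_per_call)
        result = next_action()
        if result is not None:
            return result


if __name__ == "__main__":
    # An agent stuck on an edge case that never produces a final answer.
    budget = RunBudget(max_steps=20, max_cost_usd=5.00)
    try:
        run_agent(lambda: None, budget, cost_per_call=0.30)
    except BudgetExceeded as err:
        print(f"stopped: {err} after {budget.steps} calls")
        # stopped: cost ceiling $5.00 reached after 16 calls
```

Charging before each call means the call that would breach a limit is never made, so a looping agent costs at most one run's budget instead of a night's worth of calls. A real deployment would also alert when a run is stopped rather than fail silently.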

The gap between training program outcomes and production requirements

AI Engineer has never been an entry-level role. It assumes you're already solid at programming, data structures, algorithms, and systems architecture. It requires the judgment that only comes from experience - knowing when a problem is architectural versus when it's data quality, understanding which metrics actually matter for your use case, recognizing when you need to rethink your approach versus just tune parameters.

Self-learning is absolutely possible, but it takes months of grinding through the fundamentals of both data science and software engineering, then more months building things that don't work at first. Many times. If someone tells you they can "transition you into AI engineering" in three months, they're selling comfort, not competence.

Reality Check: At Strongly, we've had customers come to us after hiring "AI Engineers" straight out of bootcamps. Smart people, enthusiasm off the charts, certificates from reputable programs, and often strong academic credentials from top universities. They were great at building the basics - end-to-end pipelines on clean data where the initial model results hit a sweet spot. But when the problem wasn't one they'd seen before, when models returned poor results, or when they needed to deploy into production, the cracks started to appear.

The analogy I like to use is from my days playing guitar. The player who learned by downloading tabs and noodling over pentatonic scales sounds amazing jamming on the acoustic by the campfire. But put them in a band with a singer who needs a song transposed down a key, or ask them to solo over chord changes more complex than a basic I-IV-V progression, and they don't even know where to start. The foundation, built through years of late-night experiments, failed gigs, and playing with musicians better than you, creates calluses of intuition that shortcuts can't replicate. Without it, a player can't pull from a broad repertoire of patterns, recognize when something sounds wrong before they can articulate why, or improvise when the plan falls apart.

It's the same with AI engineering. When an agent starts hallucinating edge cases you never tested, when your embedding quality degrades after a schema change, when costs spike because your retry logic created an infinite loop - bootcamp graduates often freeze. They've learned the notes, but they haven't learned to play.

The "entry-level AI jobs" flooding LinkedIn, such as data annotators, prompt engineers, and content writers, are adjacent roles, not the engineers you need to architect and deploy production agentic systems.

Platform + People + Process

The successful organizations we work with understand a fundamental equation:

Platform + Experienced People + Proven Process = Production Success

Take all three seriously, or watch your AI initiative stall alongside the majority still stuck in pilot mode.

The Platform provides the foundation, offering critical capabilities: the workflow builder, model access, integration, and security controls. Choose carefully, but know that even the best platform is just infrastructure. It gives you the building blocks, not the building.

The People bridge the gap between what the platform can theoretically do and what your organization actually needs. They've navigated production deployments before. They know how to architect agentic systems that scale. They've debugged enough production AI systems to recognize patterns: "Oh, this looks like embedding drift" or "Your RAG pipeline is probably retrieving irrelevant context" or "You're hitting rate limits on your LLM provider." They have both the data science intuition and the engineering discipline to build systems that work reliably.

They understand that the first implementation is really just a well-informed prototype. The real work is iterating based on production behavior: which edge cases matter, how users actually interact with the system, and where bottlenecks emerge under load.

The Process ensures knowledge transfer, not dependency. Your internal team needs to understand not just how the system works, but why it was architected that way. What happens when they need to extend it? When business requirements change? When you need to add a new data source? When you need to explain to auditors how your AI reaches decisions? When that third-party API inevitably breaks?

At StronglyAI, this plays out every week. Organizations come to us with a platform selected, a timeline approved, and a vision for what they want their agentic AI to do. What they're missing is the layer that actually gets systems from that visual workflow diagram into reliable production: Forward Deployed Engineers who've built production AI systems in complex enterprise environments.

These aren't fresh bootcamp graduates or consultants who deliver architecture diagrams and leave. They're practitioners with years in production AI environments. They are the ones who've been paged at 3 AM, who've debugged agents making unexpected decisions, who've optimized LLM costs from $50,000/month to $8,000/month, who know the difference between what works in a demo and what survives in production.

They embed with your internal teams, write code alongside your developers, document not just the "what" but the "why," and transfer the institutional knowledge that doesn't exist in any training program. They bring both the data science expertise to make AI systems effective and the engineering discipline to make them reliable. When they leave, your team can actually run and evolve these systems.

Start With Reality

If you're a CIO or technical leader planning your AI strategy, ask yourself these questions:

- Do you have engineers on staff who've deployed production AI systems? Not coursework, not side projects, but actual agentic systems processing real business transactions, making autonomous decisions, and handling edge cases you never anticipated?

- Does your team have BOTH data science and full-stack engineering capability? Can they architect a RAG pipeline AND build the API layer that serves it? Can they evaluate model performance AND implement proper monitoring?

- Can your team debug an AI agent that works in testing but fails in production? Do they understand token limits, context windows, embedding quality, prompt injection attacks, data drift, and cost optimization?

- When the system makes a decision nobody expected (and it will), can your team trace back through the logs to understand why? Do they have the observability infrastructure in place to even see what happened?
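Being able to "trace back through the logs" depends on recording decisions in a structured, queryable form from day one. Here is a minimal sketch, assuming a JSON-lines log and a hypothetical event schema (`run_id`, `step`, `event`, plus free-form fields). `DecisionTrace` and `events_for_run` are illustrative names, and the retrieval IDs and the `issue_refund` tool in the example are made up.

```python
import io
import json
import time
import uuid


class DecisionTrace:
    """Append-only JSON-lines trace of one agent run (hypothetical schema)."""

    def __init__(self, sink):
        self.run_id = str(uuid.uuid4())  # ties every event to this run
        self.sink = sink                 # any object with .write(str), e.g. a file
        self.step = 0

    def record(self, event: str, **fields) -> dict:
        """Write one event tagged with run ID, step number, and timestamp."""
        self.step += 1
        entry = {"run_id": self.run_id, "step": self.step,
                 "ts": time.time(), "event": event, **fields}
        self.sink.write(json.dumps(entry) + "\n")
        return entry


def events_for_run(jsonl_text: str, run_id: str) -> list:
    """Return one run's events in step order, e.g. to replay a surprising decision."""
    entries = [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]
    return sorted((e for e in entries if e["run_id"] == run_id), key=lambda e: e["step"])


if __name__ == "__main__":
    buf = io.StringIO()
    trace = DecisionTrace(buf)
    trace.record("retrieval", query="refund policy", doc_ids=["kb-12", "kb-40"])
    trace.record("tool_call", tool="issue_refund", reason="retrieved policy allows it")
    for e in events_for_run(buf.getvalue(), trace.run_id):
        print(e["step"], e["event"])  # 1 retrieval, then 2 tool_call
```

In practice the sink would be a log pipeline or tracing backend rather than an in-memory buffer, but the principle carries over: every step carries the run ID and enough context (what was retrieved, which tool was chosen, and why) to reconstruct the agent's path after the fact.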

- If you're planning to "upskill your way there," are you prepared for the 12-18 month timeline it actually takes to develop production AI competence? Do you have that much runway before competitors pull ahead?

If you're answering "no" or "I'm not sure," you're not alone. But you need a plan. The competitive pressure to implement AI is real and accelerating. The complexity of getting it right is equally real and far more than any drag-and-drop interface suggests. And the talent to bridge that gap is both scarce and expensive.

The organizations winning aren't the ones with the biggest AI budgets or the fanciest workflow builders. They're the ones who've honestly assessed their capability gaps and filled them with experienced people who have both the data science intuition and engineering discipline this work actually requires.

Your platform is ready. Is your team?

Assess Your AI Implementation Readiness

We're offering complimentary 30-minute assessment calls where we help you identify capability gaps and build a realistic path to production.

Schedule Your Assessment