Introduction

Large Language Models (LLMs) have transformed AI applications, but their effectiveness depends heavily on the quality of the prompts they receive. Strongly.AI has developed an agent system that optimizes prompts by passing them through customizable chains of agents, with the goal of getting better and more efficient results from every LLM interaction.

The Strongly.AI Approach to Prompt Optimization

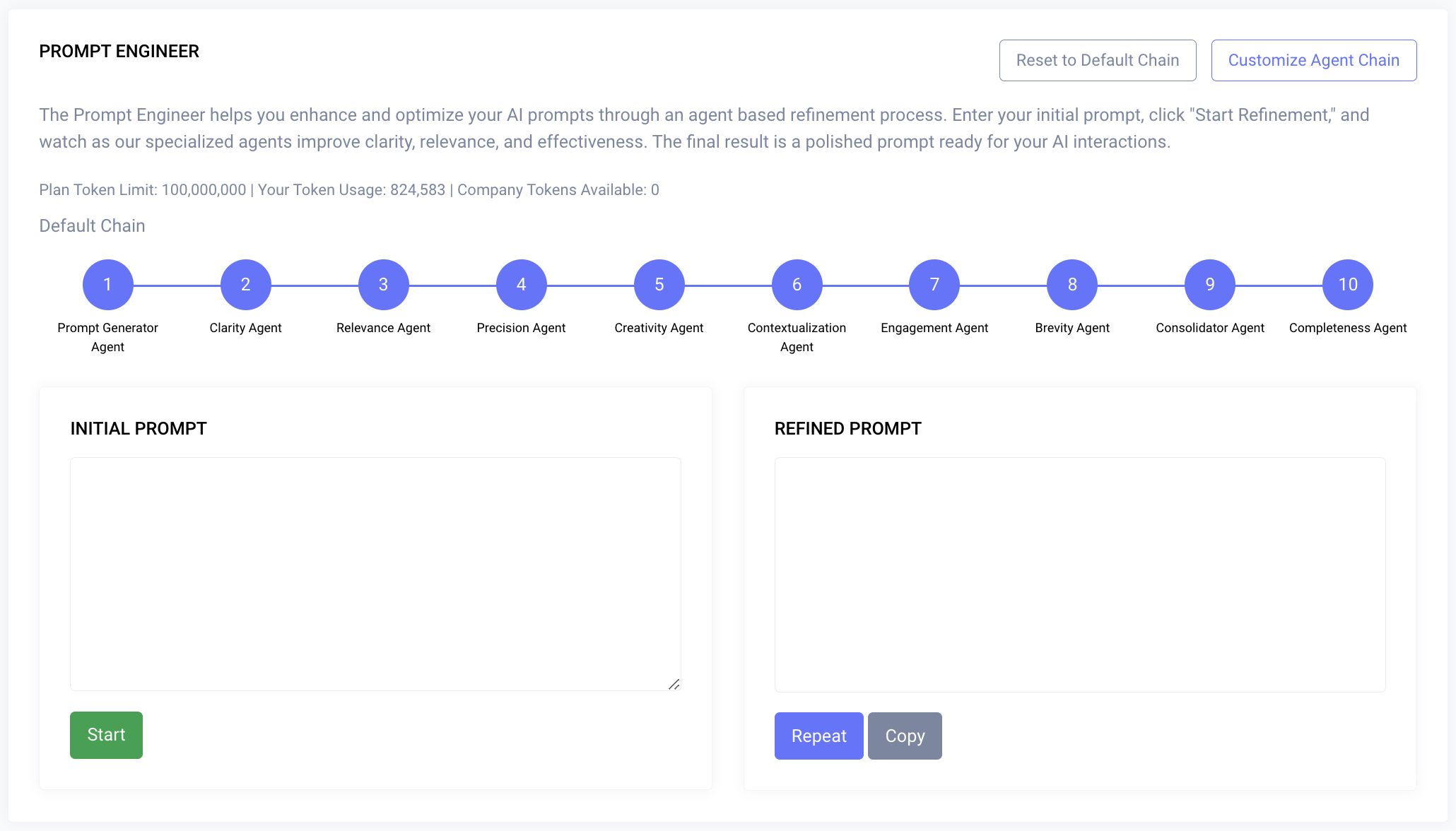

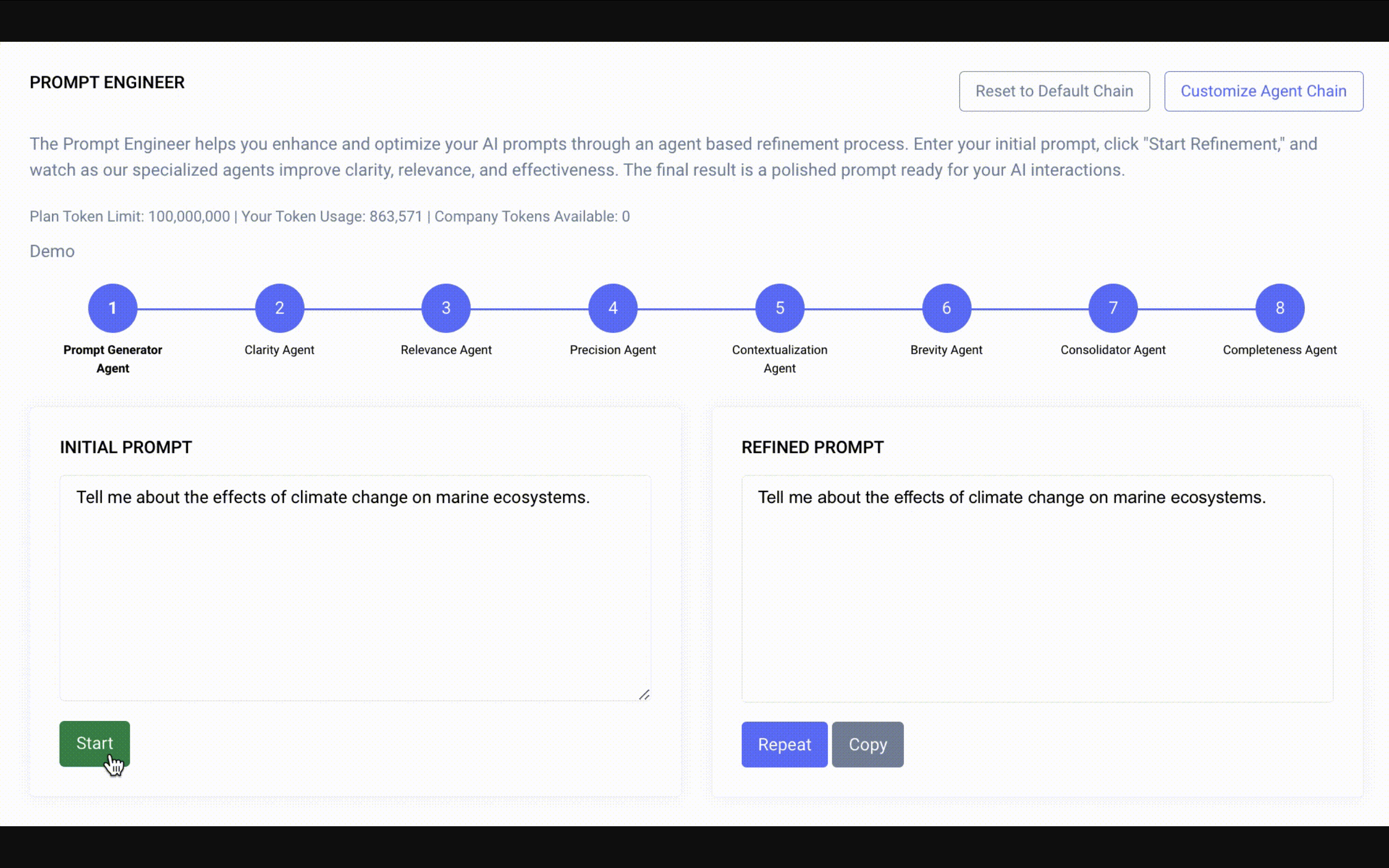

Strongly.AI's approach uses a chain of specialized agents, each designed to refine one aspect of a prompt. Because the prompt is refined in several stages, the final version is not only effective but also tailored to the requirements of the task at hand.

Key Feature: Users can create their own agents and chains, so the system can be adapted to specific use cases and industries.

The Prompt Optimization Agent Chain

Strongly.AI provides a set of pre-configured agents, including:

- Clarity Agent: Enhances the prompt's clarity, eliminating ambiguities and ensuring the language is straightforward.

- Relevance Agent: Analyzes the prompt to ensure it's directly relevant to the intended task or query.

- Precision Agent: Refines the prompt to elicit precise and accurate responses from the LLM.

- Creativity Agent: Introduces elements that encourage more creative and diverse outputs.

- Engagement Agent: Optimizes the prompt to keep the LLM focused and responsive throughout an interaction.

- Brevity Agent: Condenses the prompt to its most essential elements.

- Consolidator Agent: Integrates the other agents' refinements into a cohesive prompt and resolves conflicts between them.

- Completeness Agent: Performs a final check to ensure comprehensive coverage.
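To make the chain idea concrete, here is a minimal sketch, assuming each agent can be modeled as a named function that takes a prompt and returns a refined prompt. The names `Agent`, `run_chain`, `clarity` and `brevity` are hypothetical illustrations, not Strongly.AI's API, and the toy transforms stand in for what would, in practice, presumably be LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    """Hypothetical agent: a name plus a prompt-to-prompt transform."""
    name: str
    transform: Callable[[str], str]

    def run(self, prompt: str) -> str:
        return self.transform(prompt)


def run_chain(agents: List[Agent], prompt: str) -> str:
    """Pass the prompt through each agent in order; each sees the previous output."""
    for agent in agents:
        prompt = agent.run(prompt)
    return prompt


# Toy stand-ins for two of the pre-configured agents.
def clarity(prompt: str) -> str:
    # Collapse runs of whitespace so the prompt reads cleanly.
    return " ".join(prompt.split())


def brevity(prompt: str) -> str:
    # Drop a few filler words (illustrative list only).
    for filler in ("please ", "kindly ", "basically "):
        prompt = prompt.replace(filler, "")
    return prompt


chain = [Agent("Clarity", clarity), Agent("Brevity", brevity)]
print(run_chain(chain, "please   summarize   basically the report"))
```

This prints `summarize the report`. Order matters: Brevity relies on Clarity having already normalized the whitespace, which is one reason a chain is configured as an explicit sequence.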

Custom Agent Creation

Users can create their own specialized agents to address unique requirements. For example:

- Domain-Specific Agents: Tailored for particular industries or fields

- Style Agents: Optimize prompts for specific writing styles or brand voices

- Localization Agents: Adapt prompts for different cultural contexts and languages

- Compliance Agents: Ensure adherence to regulatory requirements or company policies
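As an illustration of how a custom agent might be built, the sketch below assumes the same hypothetical "named prompt transform" interface and creates a simple Compliance Agent that masks terms a company policy forbids. `make_compliance_agent`, the `[REDACTED]` placeholder and the banned-term list are all assumptions for illustration; a real compliance agent would likely apply richer policy checks.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Agent:
    """Hypothetical agent interface: a name plus a prompt-to-prompt transform."""
    name: str
    transform: Callable[[str], str]


def make_compliance_agent(banned_terms: Iterable[str],
                          placeholder: str = "[REDACTED]") -> Agent:
    """Build a custom Compliance Agent that masks terms a policy forbids."""
    terms = [t for t in banned_terms if t]
    if not terms:
        # Nothing to enforce: pass the prompt through unchanged.
        return Agent("Compliance", lambda prompt: prompt)
    # One case-insensitive pattern matching any banned term as a whole word.
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    return Agent("Compliance", lambda prompt: pattern.sub(placeholder, prompt))


agent = make_compliance_agent(["Project Falcon"])
print(agent.transform("Summarize the project falcon roadmap"))
```

Because the agent is produced by a factory, the same code can yield differently configured Compliance Agents for different teams or policies, which is the kind of reuse custom agents are meant to enable.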

The Prompt Optimization Process

- Initial Prompt Input: The user provides an initial prompt for optimization.

- Agent Chain Configuration: The user selects pre-configured or custom agents and their order.

- Sequential Agent Processing: The prompt passes through each agent in the sequence.

- Iterative Refinement: Additional passes may be run for further refinement.

- Final Verification: The system checks that the optimized prompt meets all required criteria.

- Output: The fully optimized prompt is ready for use with the chosen LLM.
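The steps above can be sketched as a loop, assuming agents are plain prompt-to-prompt functions and verification criteria are predicates on the final prompt. `optimize`, `max_passes`, and the toy five-word-limit agent and check are hypothetical; the stopping rules (all checks pass, the prompt stops changing, or a pass limit is reached) are one reasonable design, not necessarily Strongly.AI's.

```python
from typing import Callable, List, Tuple

Transform = Callable[[str], str]  # an agent: prompt in, refined prompt out
Check = Callable[[str], bool]     # a verification rule the final prompt must satisfy


def optimize(prompt: str, chain: List[Transform], checks: List[Check],
             max_passes: int = 3) -> Tuple[str, int]:
    """Refine `prompt` with `chain` until every check passes.

    Returns (optimized_prompt, passes_used). Raises ValueError if the prompt
    still fails verification when it stops changing or max_passes is reached.
    """
    for pass_no in range(1, max_passes + 1):
        previous = prompt
        # Sequential agent processing: each agent refines the previous output.
        for agent in chain:
            prompt = agent(prompt)
        # Final verification: stop as soon as every criterion is met.
        if all(check(prompt) for check in checks):
            return prompt, pass_no
        # Iterative refinement only helps if the last pass changed something.
        if prompt == previous:
            break
    raise ValueError("prompt failed verification after refinement")


# Toy agent and check: enforce a five-word limit, trimming one word per pass.
def drop_last_word(prompt: str) -> str:
    words = prompt.split()
    return " ".join(words[:-1]) if len(words) > 5 else prompt


def within_word_limit(prompt: str) -> bool:
    return len(prompt.split()) <= 5


print(optimize("a b c d e f g", [drop_last_word], [within_word_limit]))
```

The seven-word toy prompt needs two passes, so this prints `('a b c d e', 2)`. The "no change" check keeps the loop from spending passes on a chain that has already converged.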

Technical Innovations in Strongly.AI's Agent System

- Flexible Chain Architecture: Easy integration of custom agents and reconfiguration of sequences.

- Inter-Agent Learning: Agents share insights, continuously improving chain performance.

- Adaptive Optimization Paths: Dynamic adjustments based on input prompt characteristics.

- Prompt Version Control: Maintains a history of prompt iterations so versions can be compared and changes traced.

- API Integration: Seamless incorporation into existing workflows and applications.
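Prompt version control could work roughly as follows. This is a minimal sketch, assuming each revision is stored together with the agent that produced it; `PromptHistory`, `commit` and `diff` are hypothetical names, and the word-level comparison uses Python's standard `difflib.unified_diff`.

```python
import difflib
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptHistory:
    """Hypothetical store recording each prompt revision and the agent that produced it."""
    versions: List[str] = field(default_factory=list)
    authors: List[str] = field(default_factory=list)

    def commit(self, prompt: str, agent: str) -> int:
        """Save a revision and return its 0-based version number."""
        self.versions.append(prompt)
        self.authors.append(agent)
        return len(self.versions) - 1

    def diff(self, old: int, new: int) -> str:
        """Word-level unified diff between two stored versions."""
        return "\n".join(
            difflib.unified_diff(
                self.versions[old].split(),
                self.versions[new].split(),
                fromfile=f"v{old} ({self.authors[old]})",
                tofile=f"v{new} ({self.authors[new]})",
                lineterm="",
            )
        )


history = PromptHistory()
history.commit("Explain climate change", "user")
history.commit("Explain climate change effects on marine ecosystems", "Precision Agent")
print(history.diff(0, 1))
```

Recording the agent name with each version shows which stage of the chain introduced a given change, which is what makes the history useful for understanding, not just rollback.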

Use Case: Optimizing a Research Query Prompt

Initial Prompt:

Final Optimized Prompt:

Analyze climate change effects on marine ecosystems:

- Ocean temperature: Increase since 1900, 2100 projection, impact on biodiversity (name 3 species with shifted ranges).

- Ocean acidification: Current pH, change rate since industrialization, effect on coral reefs (global decline percentage, most vulnerable system).

- Sea level rise: Annual increase over last decade, impact on coastal habitats (quantify mangrove loss in one region).

- Altered ocean currents: Gulf Stream changes in last 50 years, influence on fish populations (North Atlantic cod stock changes since 1950).

Conclude with two evidence-based predictions for marine food webs and global fisheries by 2050, considering cumulative effects and potential tipping points in marine ecosystems.

Custom Chain Example: Legal Document Analysis

A law firm could create a custom agent chain for analyzing legal documents:

- Legal Terminology Agent: Ensures correct legal language usage.

- Jurisdiction-Specific Agent: Focuses on relevant laws for a specific jurisdiction.

- Case Law Agent: Incorporates references to relevant precedents.

- Client-Specific Agent: Tailors the prompt to the client's particular situation.

- Confidentiality Agent: Ensures privileged information is excluded from the prompt.
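One way such a chain might be configured is by naming agents in a list and composing them from a registry. The sketch below wires up only two of the five agents as placeholders. `REGISTRY`, `build_chain`, the fixed jurisdiction text, and the convention that privileged passages arrive wrapped in `[[ ... ]]` are all assumptions made for illustration.

```python
import re
from functools import reduce
from typing import Callable, Dict, List

Transform = Callable[[str], str]


def jurisdiction_agent(prompt: str) -> str:
    # Illustrative only: pins the analysis to one jurisdiction.
    return prompt + " Limit the analysis to the law of England and Wales."


def confidentiality_agent(prompt: str) -> str:
    # Assumed convention: privileged passages are wrapped in [[ ... ]].
    redacted = re.sub(r"\[\[.*?\]\]", "", prompt)
    return " ".join(redacted.split())  # tidy the whitespace left behind


# Hypothetical registry: agent names a chain configuration can refer to.
REGISTRY: Dict[str, Transform] = {
    "jurisdiction": jurisdiction_agent,
    "confidentiality": confidentiality_agent,
}


def build_chain(names: List[str]) -> Transform:
    """Compose registered agents, in the listed order, into one prompt transform."""
    missing = [n for n in names if n not in REGISTRY]
    if missing:
        raise KeyError(f"unknown agents: {missing}")
    steps = [REGISTRY[n] for n in names]
    return lambda prompt: reduce(lambda p, step: step(p), steps, prompt)


legal_chain = build_chain(["jurisdiction", "confidentiality"])
print(legal_chain("Review clause 4 [[client pays 2M]] for enforceability."))
```

Placing the Confidentiality Agent last means it sees the output of every earlier agent, so nothing added upstream can reintroduce privileged text. Failing fast on unknown agent names keeps a misspelled configuration from silently skipping a step.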

The Future of Prompt Optimization with Strongly.AI

As LLM technology advances, we at Strongly.AI will continue to evolve our prompt optimization system. Features we are considering include:

- A marketplace for sharing and discovering community-created custom agents

- Advanced analytics for understanding and improving custom agent performance

- Integration with specific LLM architectures for tailored optimization

- Real-time prompt adjustment based on LLM output analysis

- Multi-modal prompt optimization for text-to-image and other emerging AI models

Conclusion

Strongly.AI's customizable Agent System for prompt optimization is a significant step toward getting the most out of Large Language Models. By providing a flexible framework in which users can create and integrate custom agents, we've built a robust, adaptable system designed to make each interaction with an LLM as effective and efficient as possible.

Whether you use our pre-configured agents or build a highly specialized chain, Strongly.AI's approach to prompt optimization can help unlock the full capabilities of language models across a wide range of applications and industries.