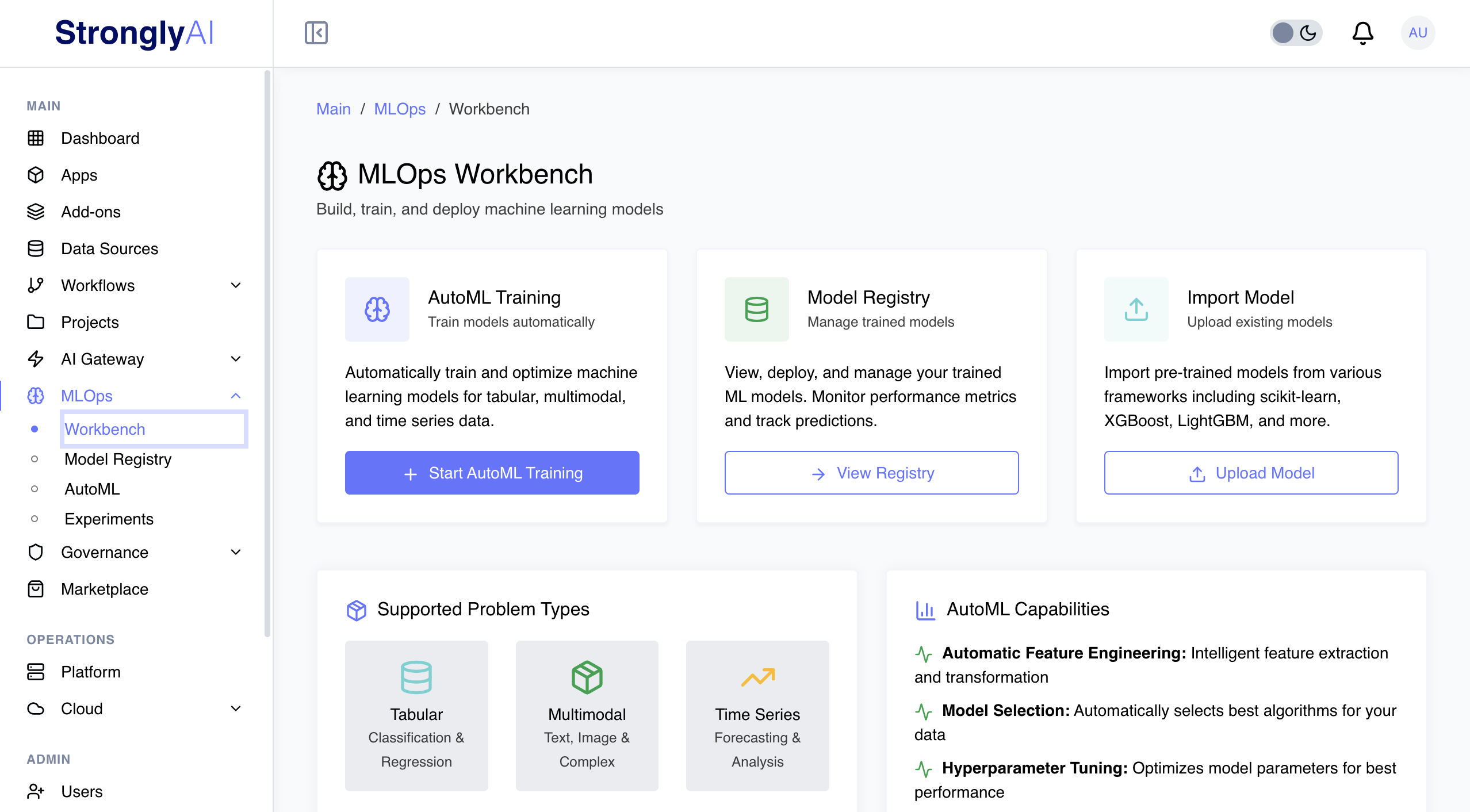

MLOps Workbench

Streamline the entire AI model lifecycle from development to production. Deploy faster, monitor smarter, and scale reliably with automated MLOps pipelines built for enterprise teams.

Complete Model Lifecycle Management

From training to production, manage AI models at enterprise scale

Model Deployment

Deploy models with a single click. Automatic containerization, scaling, and load balancing. Support for REST APIs, batch inference, and real-time endpoints.

Performance Monitoring

Track model performance in real time. Monitor accuracy, latency, throughput, and resource usage. Alert on degradation and drift before they impact users.

Version Control

Track every model version with full lineage. Compare experiments, roll back deployments, and maintain reproducibility across your entire model registry.

Auto-Scaling

Automatically scale inference capacity based on demand. Handle traffic spikes without manual intervention while optimizing costs during periods of low usage.
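
To make demand-based scaling concrete, here is a minimal sketch of a proportional scaling rule, similar in spirit to the one used by common horizontal autoscalers. It is an illustration, not MLOps Workbench's actual algorithm: the function name `desired_replicas`, its parameters, and the replica limits are all assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_load: float,   # observed load per replica, e.g. requests/sec (hypothetical metric)
    target_load: float,    # load per replica we want to hold (hypothetical setting)
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Return a replica count that moves per-replica load toward the target."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    # Scale the replica count by the ratio of observed to target load,
    # rounding up so capacity is never under-provisioned.
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the configured bounds to cap cost and keep a minimum footprint.
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 replicas each seeing 150 requests per second against a target of 100 would scale out to 6, while the same 4 replicas at 10 requests per second would scale in to the configured minimum.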

Drift Detection

Detect data drift and model decay automatically. Get alerted when input distributions change or model performance degrades, with recommendations for retraining.
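
One common way to quantify a change in input distributions is the Population Stability Index (PSI). The sketch below is a minimal standard-library version under stated assumptions: equal-width bins taken from the reference sample, and a small floor to avoid empty bins. It is not necessarily the method MLOps Workbench uses, and the function name is hypothetical.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """PSI between a reference sample and a current sample (illustrative only)."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to 1.0 for constant data.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))
```

Identical samples give a PSI of 0. A commonly cited rule of thumb treats values above roughly 0.2 as a shift worth investigating, although the right threshold depends on the feature and the use case.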

CI/CD Pipelines

Automate model testing, validation, and deployment. Integrate with existing DevOps tools and enforce quality gates before production rollout.
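
A quality gate can be as simple as comparing a candidate model's evaluation metrics against agreed thresholds and blocking the rollout if any check fails. The sketch below assumes hypothetical metric names and thresholds and is not tied to any particular CI/CD tool.

```python
from typing import Dict, List


def failed_gates(
    metrics: Dict[str, float],
    minimums: Dict[str, float],   # metrics that must be at least this value
    maximums: Dict[str, float],   # metrics that must be at most this value
) -> List[str]:
    """Return the names of metrics that violate a threshold (empty list = pass)."""
    failures: List[str] = []
    for name, floor in minimums.items():
        value = metrics.get(name)
        # A missing metric counts as a failure so gates cannot be skipped silently.
        if value is None or value < floor:
            failures.append(name)
    for name, ceiling in maximums.items():
        value = metrics.get(name)
        if value is None or value > ceiling:
            failures.append(name)
    return failures
```

A pipeline step could call `failed_gates` after evaluation and exit with a non-zero status when the returned list is not empty, which stops the deployment stage from running.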

Production-Ready AI Operations

Deploy and manage models with confidence

Faster Time to Production

Reduce deployment time from weeks to hours. Automated pipelines eliminate manual steps and standardize operations across teams.

Improved Reliability

Achieve 99.9% uptime with automated health checks, failover, and rollback, so your models are available when you need them.

Complete Visibility

Full observability into model behavior, performance, and costs. Understand what's happening and why with comprehensive logging and metrics.

Ready to Scale Your AI Operations?

See how enterprise MLOps accelerates model deployment and improves reliability